As part of building our own tab-completion for Unity, I went down the rabbit hole of current tab-completion solutions, how they work, and how they are trained. This short story is about how Cursor acquired the best tab-complete solution on the market.

To tell this story, we have to go back to a time before LLMs were mainstream. In 2018, Jacob Jackson created TabNine, one of the first code completion tools. For reference, the LLM awakening came 4 years later, when OpenAI released an early demo of ChatGPT (30 November 2022). Jackson graduated from the University of Waterloo in 2019. While studying, he interned at Jane Street, Hudson River Trading, and OpenAI, and started TabNine on the side. He got an early start in dev tools by making UI creation easier inside Jane Street.

Codota acquired TabNine in November 2019, and Jackson moved to OpenAI as a Research Scientist for the next 2.5 years. He left OpenAI in 2022 to start Supermaven, a direct competitor to Cursor (also founded in 2022). Supermaven was never as popular as Cursor, but its tab-completion model, Babble, was the best on the market. In February 2024, when ChatGPT had a context window of 32k tokens, Babble had a context window of 300k tokens and a latency of 250ms. Compare that to the 1883ms latency of Cursor's model at the time!

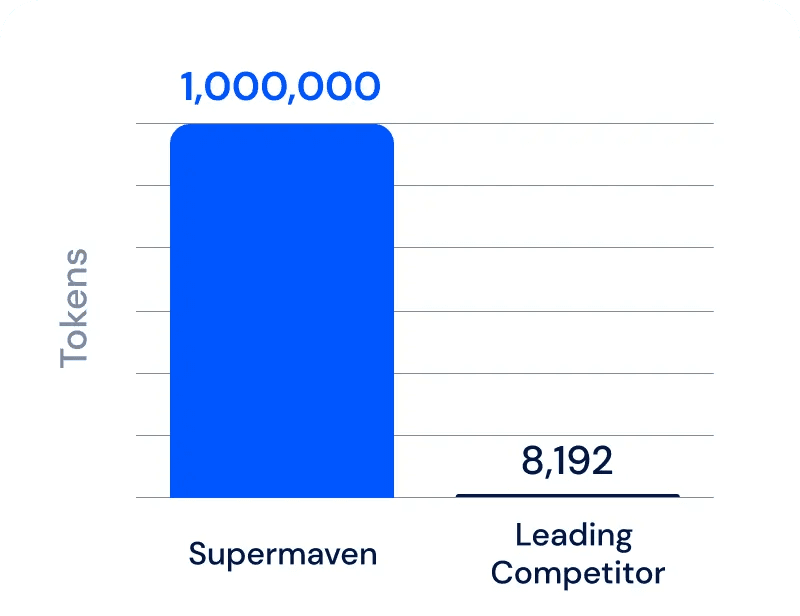

Right before Supermaven was acquired, Babble boasted a 1M-token context window, which is absurd given that the popular (much slower) chat models were still at around 128k.

A key differentiator from the start was how Supermaven trained Babble. Most providers train their auto-complete models with the Fill-in-the-Middle (FIM) approach: the model sees the code before and after a gap and learns to fill in the gap. This is rather limited, because suggestions always start at your caret (text cursor) and run downward from there. But what if you wanted to jump to the top of the file to import a new package? Or what if you wanted to jump to a new file entirely?
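To make the FIM limitation concrete, here is a minimal sketch of how a FIM training example can be built. The sentinel tokens `<PRE>`, `<SUF>`, and `<MID>` and the helper `make_fim_example` are placeholders I made up for illustration; each real model defines its own tokens and ordering.

```python
# Hypothetical sentinel tokens; real FIM models each define their own.
PREFIX_TOKEN = "<PRE>"
SUFFIX_TOKEN = "<SUF>"
MIDDLE_TOKEN = "<MID>"


def make_fim_example(source: str, start: int, end: int) -> tuple[str, str]:
    """Cut source[start:end] out of a file and return (prompt, target).

    The model sees the text before and after the gap and must
    produce the missing middle.
    """
    prefix, middle, suffix = source[:start], source[start:end], source[end:]
    prompt = f"{PREFIX_TOKEN}{prefix}{SUFFIX_TOKEN}{suffix}{MIDDLE_TOKEN}"
    return prompt, middle


source = "def add(a, b):\n    return a + b\n"

# Pretend the caret sits right before "return" and the gap runs to end of line.
start = source.index("return")
end = source.index("\n", start)

prompt, target = make_fim_example(source, start, end)
```

Note that the gap is a single contiguous span at one position. Nothing in this format lets the model say "also add an import at line 1", which is exactly the limitation described above.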

As a solution, Babble was trained on edit sequences instead of lines of code, more akin to what you'd see in a git diff. Since Cursor owns the entire editor, they are privy to the changes initiated, accepted, and rejected by developers in a project, putting them in pole position for training the best tab-completion model.
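Supermaven's actual training format isn't public, so the following is only a rough sketch of what "training on edit sequences" could look like. It assumes we have successive snapshots of a file and uses Python's standard `difflib` to turn them into diffs; the `edit_sequence` helper and the sample snapshots are hypothetical.

```python
import difflib


def edit_sequence(snapshots: list[str], filename: str = "example.py") -> list[str]:
    """Turn consecutive file snapshots into a list of unified diffs."""
    diffs = []
    for before, after in zip(snapshots, snapshots[1:]):
        diff = difflib.unified_diff(
            before.splitlines(keepends=True),
            after.splitlines(keepends=True),
            fromfile=filename,
            tofile=filename,
        )
        diffs.append("".join(diff))
    return diffs


# Three snapshots of the same file as a developer edits it.
snapshots = [
    "def area(r):\n    return 3.14 * r * r\n",
    "def area(r):\n    return math.pi * r * r\n",
    "import math\n\ndef area(r):\n    return math.pi * r * r\n",
]

edits = edit_sequence(snapshots)

# Assumed framing: earlier edits are the context, the next edit is the target.
history, target = edits[:-1], edits[-1]
print(target)
```

In this toy example, the history shows the developer switching to `math.pi`, and the target is a diff that adds `import math` at the top of the file. A model trained this way can learn to suggest an edit far from the caret, which a fill-the-gap format cannot express.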

But Cursor didn't really have the best model. All the while, the Cursor founders and Jacob were having conversations; they had known each other since before Supermaven started. Jacob was planning to make his own IDE after hitting the limits of a plugin. The Cursor team was probably hard at work trying to catch up on tab-complete, so it was a match made in heaven.

And that's how Cursor got the best tab-complete model on the market. Given Cursor's large customer base, they now have a huge data moat to keep their model the best. The main risk at the moment seems to be that code-copilot workflows might change completely, as we're seeing with Cline.